Dr. Sanchita Ghose Received NSF ERI Grant to Develop SoundEYE: AI-Driven Sensory Augmentation System for Visually Impaired Individuals through Natural Sound

Dr. Sanchita Ghose, Assistant Professor of Computer Engineering, received a National Science Foundation (NSF) Engineering Research Initiation (ERI) Award totaling $200,000 over two years (2024-2026) to develop SoundEYE, an AI-Driven Sensory Augmentation System for Visually Impaired Individuals through Natural Sound.

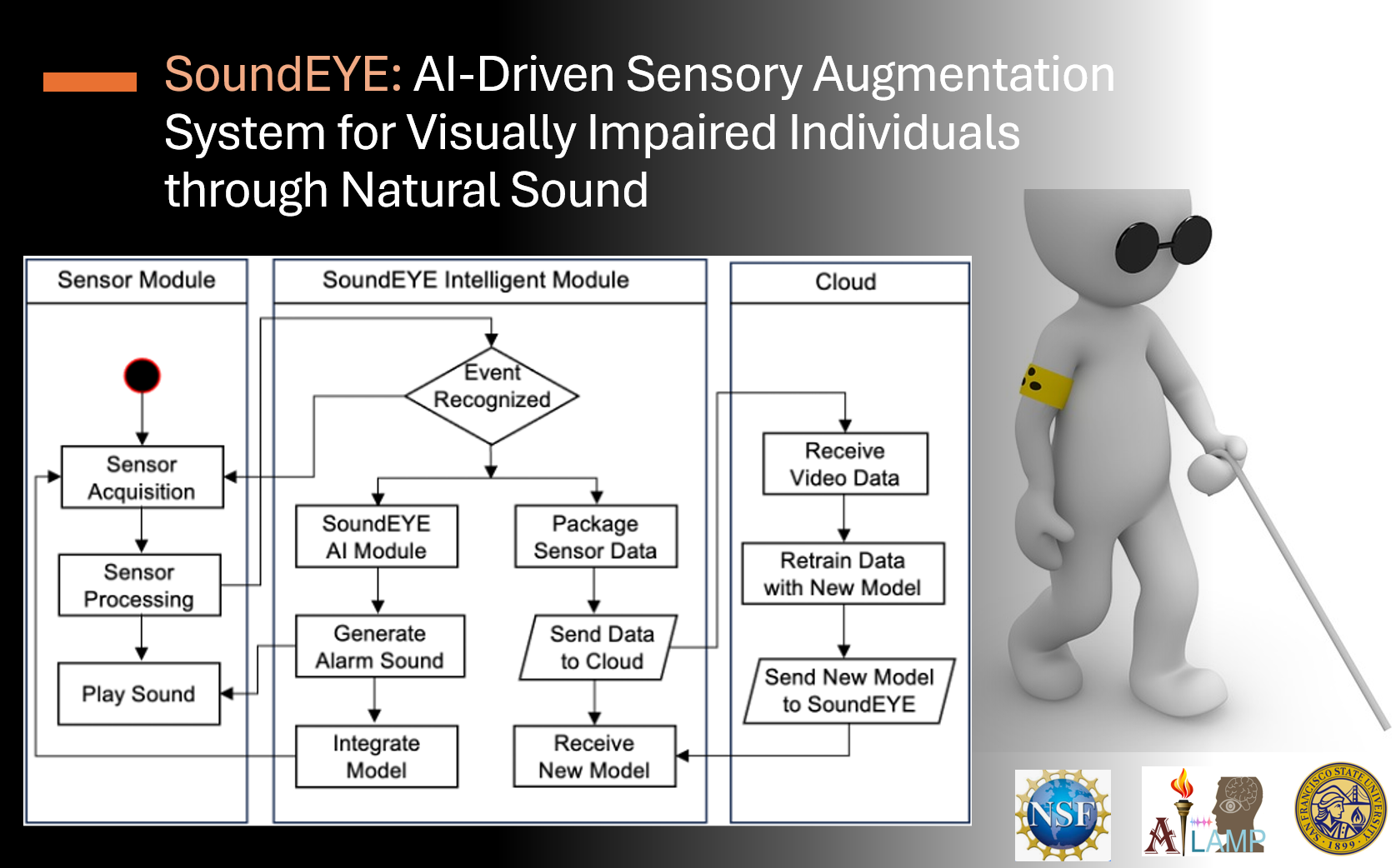

The goal of this project is to improve the independence, accessibility, safety, and overall well-being of visually impaired persons (VIPs) by developing an AI-based sensory augmentation system that leverages natural, representative sound. The research hypothesizes that representative sound synchronized with visually recognized objects and events will augment visual perception more effectively than conventional auditory cues or captioning. The system is intended to enhance spatial cognition, streamline navigation, increase personal freedom and empowerment, and support the health and well-being of its users. The project will address two key challenges: providing contextually relevant, augmented sound alerts for the most immediate event by analyzing complex scenes that contain multiple sound sources, and generating representative, synchronized sound by capturing audio-visual synchronization with moving objects. Automatically generating representative sound in critical situations can significantly help VIPs comprehend and respond to real-world events. Additional deliverables of the project include engineering research experiences for students from a wide range of backgrounds, including under-represented minority groups.

The proposed framework can also contribute to the development of cyber-physical and IoT systems for human sensory augmentation using advanced AI techniques. The proposed visual-to-audio synthesis model has the potential to impact diverse applied fields, such as cyber-physical system design for safety and security applications; multimodal signal processing and system integration for next-generation multimedia systems; and the engineering of AI systems for enhanced embedded battlefield intelligence with the necessary situational awareness.